The KVM hypervisor is a virtualization system included with the Linux kernel. Along with XEN, it is one of the most attractive Linux-based virtualization platforms.

KVM offers several advantages over the more user-friendly VirtualBox. Since it is integrated into the mainline Linux kernel, it offers significant performance benefits [1]. Furthermore, it is better suited as a dedicated virtualization platform, while VirtualBox is better suited for short-term tests and casual, user-owned VMs. KVM supports many guest operating systems, so you can run Linux, Unix, Windows or something more exotic.

Install KVM on your system

- Make sure your system supports KVM.

KVM is only supported on systems with hardware-assisted virtualization (HAV), i.e. Intel VT-x or AMD-V. If your system does not support HAV, you can fall back to QEMU, the emulator that KVM is based on.

- First install the CPU checker:

$ sudo apt-get -y install cpu-checker

- Check if KVM is supported:

$ kvm-ok
INFO: /dev/kvm exists
KVM acceleration can be used

This looks OK. If kvm-ok reports a problem instead, check whether the feature is enabled in your BIOS/EFI settings.

- Install KVM:

$ sudo apt-get -y install qemu-kvm
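If cpu-checker is not available, a rough equivalent of the kvm-ok check can be scripted by hand. The sketch below is a simplified approximation, not kvm-ok itself: it looks for the vmx (Intel VT-x) or svm (AMD-V) flag in /proc/cpuinfo and checks that /dev/kvm exists. The function names hav_vendor and check_kvm are hypothetical.

```shell
#!/bin/sh
# Sketch: a simplified, hand-rolled approximation of the kvm-ok check.
# It looks for the vmx (Intel VT-x) or svm (AMD-V) CPU flag and for /dev/kvm.

# hav_vendor: read CPU flag text (e.g. /proc/cpuinfo) on stdin and
# print "intel", "amd" or "none".
hav_vendor() {
    input=$(cat)
    if printf '%s\n' "$input" | grep -qw vmx; then
        echo intel
    elif printf '%s\n' "$input" | grep -qw svm; then
        echo amd
    else
        echo none
    fi
}

check_kvm() {
    echo "Hardware virtualization flag: $(hav_vendor < /proc/cpuinfo)"
    if [ -e /dev/kvm ]; then
        echo "/dev/kvm exists"
    else
        echo "/dev/kvm is missing (KVM modules not loaded, or HAV unsupported or disabled)"
    fi
}

# Run the check only on hosts that expose /proc/cpuinfo (i.e. Linux).
if [ -r /proc/cpuinfo ]; then
    check_kvm
fi
```

On some systems the CPU flag is reported even when the feature is disabled in the BIOS/EFI, so kvm-ok remains the more reliable check.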

KVM Basic Usage

You can use KVM directly. This method is suitable for testing or troubleshooting but not appropriate for production VMs.

- Create a disk image:

$ qemu-img create -f qcow2 testvm.qcow2 20G
Formatting 'testvm.qcow2', fmt=qcow2 size=21474836480 encryption=off cluster_size=65536 lazy_refcounts=off

The qcow2 format grows dynamically, so the image does not actually occupy 20GB yet:

$ ls -lh testvm.qcow2
-rw-r--r-- 1 theo theo 193K May 14 18:43 testvm.qcow2

- Start a VM instance to set up your system:

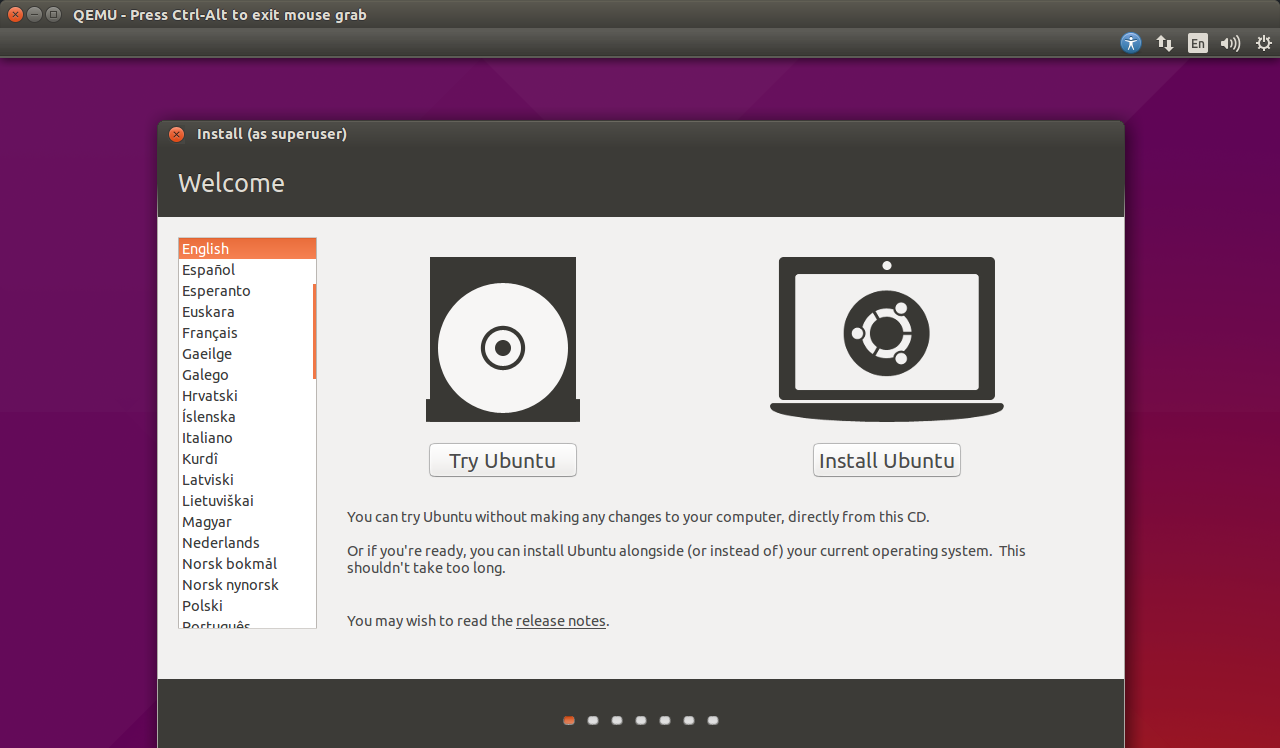

$ kvm -m 1024 -hda testvm.qcow2 -cdrom ~/Downloads/ubuntu-15.04-desktop-amd64.iso -boot d -smp 2The options are explained below:

- -m: memory in MB

- -hda: first disk image to use

- -cdrom: you can use an .iso file (ubuntu-15.04-desktop-amd64.iso) or a physical CD-ROM (/dev/sr0).

- -boot: choose where to boot from. A parameter of d tells KVM to use the cdrom for booting.

- -smp: Stands for Symmetric Multiprocessing. 2 is the number of CPUs available to the VM.

After you run the command above you will get a window with your VM running in it:

This window will capture your mouse and keyboard when you work in it. If you want to return to your host OS just press Ctrl-Alt together and they will both be released.

- Run your VM.

After the installation has finished, you can run your VM from the disk image.

- First, let’s check the size of your disk:

$ ls -lh testvm.qcow2
-rw-r--r-- 1 theodotos theodotos 5.9G May 14 19:33 testvm.qcow2

So after installing Ubuntu Desktop 15.04 (Vivid Vervet), the disk image has grown to 5.9GB.

- Run the VM from the disk image:

$ kvm -m 1024 -hda testvm.qcow2 -smp 2

A new window will pop up with the freshly installed OS.
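The two kvm invocations in this section differ only in whether the installer ISO is attached, so a small wrapper can keep the memory, CPU and image settings in one place. This is a hypothetical convenience script: the file name kvm-run.sh and the variables IMAGE, MEMORY, CPUS and DRY_RUN are assumptions, and it only uses the kvm options explained above.

```shell
#!/bin/sh
# kvm-run.sh: hypothetical wrapper around the kvm commands shown above.
#   sh kvm-run.sh install <iso>   boot the installer ISO (-cdrom ... -boot d)
#   sh kvm-run.sh run             boot the installed disk image
# Settings can be overridden from the environment; DRY_RUN=1 only prints.

IMAGE=${IMAGE:-testvm.qcow2}   # disk image created with qemu-img
MEMORY=${MEMORY:-1024}         # RAM in MB (-m)
CPUS=${CPUS:-2}                # number of virtual CPUs (-smp)

launch() {
    mode=$1
    iso=$2
    # Build the argument list in the function's positional parameters,
    # which keeps paths with spaces correctly quoted.
    set -- -m "$MEMORY" -hda "$IMAGE" -smp "$CPUS"
    if [ "$mode" = install ]; then
        set -- "$@" -cdrom "$iso" -boot d
    fi
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo kvm "$@"
    else
        kvm "$@"
    fi
}

# Start a VM only when arguments are given on the command line.
if [ $# -gt 0 ]; then
    launch "$@"
fi
```

For example, run sh kvm-run.sh install ~/Downloads/ubuntu-15.04-desktop-amd64.iso for the first boot and sh kvm-run.sh run afterwards; DRY_RUN=1 sh kvm-run.sh run only prints the resulting command.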


Running KVM under libvirt

The libvirt system is a platform for running VMs under many different hypervisors through a common API and toolset. It supports KVM, XEN, QEMU, VirtualBox and many others. This is the preferred way of using KVM: the VMs are available system-wide to privileged (local and remote) users, VM management is easier, and you can configure autostart and many other features.

- Setting up libvirt:

$ sudo apt-get -y install libvirt-bin

- Give appropriate permissions to the users expected to manage your VMs:

$ sudo usermod -a -G libvirtd theo

The theo user will be added to the libvirtd group. After that, you need to log out and back in for the new group membership to take effect.
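Because group changes only apply to new login sessions, it can help to check both the recorded membership and the current session. The sketch below uses only id and grep; in_group is a hypothetical helper name. It also assumes that newer Ubuntu releases may name the group libvirt rather than libvirtd, so it tries both.

```shell
#!/bin/sh
# Sketch: check libvirt group membership for the current user.
# Assumption: the group is "libvirtd" on the release used in this article
# and may be named "libvirt" on newer releases, so both names are tried.

# in_group GROUP [USER]
# With USER, check the membership recorded in the group database
# (visible right after usermod). Without USER, check the groups of the
# current session (updated only after logging out and back in).
in_group() {
    group=$1
    if [ -n "$2" ]; then
        groups=$(id -nG "$2")
    else
        groups=$(id -nG)
    fi
    # Word-split the space-separated list, one name per line, and match exactly.
    printf '%s\n' $groups | grep -qx "$group"
}

me=$(id -un)
if in_group libvirtd || in_group libvirt; then
    echo "$me can manage libvirt VMs in this session"
elif in_group libvirtd "$me" || in_group libvirt "$me"; then
    echo "$me was added to the group: log out and back in to activate it"
else
    echo "$me is not in the libvirt group yet"
fi
```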

Creating a libvirt ready VM

There are many tools to create VMs for libvirt. In this section we are going to examine two of them: virt-install and uvtool.

- Using virt-install.

The advantage of virt-install is that it is distro-agnostic: you can use it to install Debian, Ubuntu, RHEL, CentOS, Fedora, SUSE and many other distros.

- Install virt-install:

$ sudo apt-get -y install virtinst

- Create a machine:

$ sudo virt-install -n testvm -r 512 --disk path=/var/lib/libvirt/images/testvm.img,bus=virtio,size=4 -c ~/Downloads/ubuntu-14.04.2-server-amd64.iso --network network=default,model=virtio --graphics vnc,listen=127.0.0.1 --noautoconsole -v
Starting install...
Allocating 'testvm.img' | 4.0 GB 00:00
Creating domain... | 0 B 00:01
Domain installation still in progress. You can reconnect to the console to complete the installation process.

The options are explained below:

- -n: VM name.

- -r: RAM in MB.

- --disk: path for the virtual disk; bus=virtio selects the paravirtualized disk driver and size=4 sets the disk size in GB.

- -c: define the .iso file or CD-ROM device to use for the OS installation.

- --network: select your preferred networking mode.

- --graphics: select the graphics protocol. Here we use VNC listening only on localhost. Use 0.0.0.0 (any) instead of 127.0.0.1 to allow connections from elsewhere.

- --noautoconsole: do not automatically connect to the guest console.

- -v: request full (hardware-assisted) virtualization.
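If you create several VMs this way, the long virt-install command line can be wrapped in a function so that only the name, RAM, disk size and ISO change. This is a hypothetical helper (create_vm, create-vm.sh and DRY_RUN are illustrative names); the flags are exactly the ones used above, and the disk path is assumed to follow the /var/lib/libvirt/images/NAME.img pattern from the example.

```shell
#!/bin/sh
# Sketch: reusable wrapper for the virt-install command above.
# Usage: sh create-vm.sh NAME RAM_MB DISK_GB ISO   (DRY_RUN=1 only prints)

create_vm() {
    name=$1      # -n: VM name
    ram=$2       # -r: RAM in MB
    disk_gb=$3   # size= inside --disk, in GB
    iso=$4       # -c: installation ISO or CD-ROM device
    set -- virt-install -n "$name" -r "$ram" \
        --disk "path=/var/lib/libvirt/images/$name.img,bus=virtio,size=$disk_gb" \
        -c "$iso" \
        --network network=default,model=virtio \
        --graphics vnc,listen=127.0.0.1 \
        --noautoconsole -v
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$@"
    else
        # virt-install writes to /var/lib/libvirt/images, hence sudo.
        sudo "$@"
    fi
}

if [ $# -eq 4 ]; then
    create_vm "$@"
fi
```

For example, sh create-vm.sh testvm 512 4 ~/Downloads/ubuntu-14.04.2-server-amd64.iso should reproduce the command above.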


- Connect to the VM and set up the guest OS:

$ xtightvncviewer 127.0.0.1

The VNC client will connect to the default VNC port, 5900. To use a different port, append ::<port> to the hostname or IP address, e.g.:

$ xtightvncviewer 127.0.0.1::5901

NOTE: If xtightvncviewer is not installed, you can install it with sudo apt-get install xtightvncviewer. You can also use a graphical VNC client like Remmina.
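When several VMs use VNC, each gets its own display, so the port is not always 5900. virsh vncdisplay prints a guest's display (for example 127.0.0.1:0), and display N maps to TCP port 5900 + N. The sketch below turns that into the ::port form that xtightvncviewer expects; display_to_port and vnc-port.sh are hypothetical names, and the 127.0.0.1 address assumes the localhost-only listen setting used above.

```shell
#!/bin/sh
# Sketch: print the xtightvncviewer command for a libvirt guest.
# Usage: sh vnc-port.sh testvm

# display_to_port "127.0.0.1:1" -> 5901 (VNC display N is TCP port 5900 + N)
display_to_port() {
    display=${1##*:}   # keep only what follows the last ':'
    echo $((5900 + display))
}

if command -v virsh >/dev/null 2>&1 && [ -n "$1" ]; then
    if disp=$(virsh vncdisplay "$1") && [ -n "$disp" ]; then
        # Assumes VNC listens on 127.0.0.1, as in the virt-install example.
        echo "xtightvncviewer 127.0.0.1::$(display_to_port "$disp")"
    else
        echo "No VNC display found for $1 (is it running?)" >&2
    fi
fi
```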

Verify that the machine has been created:

$ virsh list --all
 Id    Name                           State
----------------------------------------------------
 -     testvm                         shut off

The machine will appear as shut off after the OS setup finishes.

Start the VM:

$ virsh start testvm
Domain testvm started

$ virsh list
 Id    Name                           State
----------------------------------------------------
 3     testvm                         running
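virsh start fails if the domain is already active, so scripts often check the state first. virsh domstate prints the current state (such as running or shut off); the hypothetical ensure_running helper below starts the domain only when it is shut off and leaves running or paused domains alone.

```shell
#!/bin/sh
# Sketch: start a libvirt domain only if it is currently shut off.
# Usage: sh ensure-running.sh testvm

needs_start() {
    [ "$1" = "shut off" ]
}

ensure_running() {
    dom=$1
    state=$(virsh domstate "$dom") || return 1
    if needs_start "$state"; then
        virsh start "$dom"
    else
        echo "Domain $dom is $state, not starting it"
    fi
}

if command -v virsh >/dev/null 2>&1 && [ -n "$1" ]; then
    ensure_running "$1"
fi
```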

- Install a VM using uvtool:

uvtool is a tool for creating minimal VMs. Unlike virt-install, it can only create Ubuntu VMs, but uvtool takes care of the overall setup.

- Install uvtool:

$ sudo apt-get -y install uvtool uvtool-libvirt

- Create a local repository of Ubuntu cloud images:

$ uvt-simplestreams-libvirt sync release=trusty arch=amd64

This command downloads the trusty (14.04) release locally.

- Query the local repository:

$ uvt-simplestreams-libvirt query
release=trusty arch=amd64 label=release (20150506)

- Generate an SSH key pair (unless you already have one):

$ ssh-keygen -b 4096

- Create a uvt-based VM:

$ uvt-kvm create --cpu 2 --memory=1024 --disk=10 testuvt

This creates a trusty VM with 2 CPUs, 1GB of RAM and a 10GB disk.
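A new uvtool VM needs a moment to boot, so connecting immediately may fail. uvtool provides uvt-kvm wait, which blocks until the guest is ready, and uvt-kvm ip, which prints its address. The sketch below chains them; the wait_and_show_ip name, the uvt-ready.sh file name and the UVT_KVM override variable are hypothetical, and depending on your uvtool version wait may need extra options such as --insecure, like uvt-kvm ssh in the next step.

```shell
#!/bin/sh
# Sketch: wait for a new uvtool VM and print its IP address.
# Usage: sh uvt-ready.sh testuvt

UVT_KVM=${UVT_KVM:-uvt-kvm}   # command to call; overridable for testing

wait_and_show_ip() {
    vm=$1
    # Block until the guest has finished booting.
    # (Some uvtool versions may also need --insecure here.)
    "$UVT_KVM" wait "$vm" || return 1
    echo "$vm is reachable at $("$UVT_KVM" ip "$vm")"
}

if command -v "$UVT_KVM" >/dev/null 2>&1 && [ -n "$1" ]; then
    wait_and_show_ip "$1"
fi
```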


- Verify the machine creation:

$ virsh list
 Id    Name                           State
----------------------------------------------------
 5     testuvt                        running

- Connect to your VM:

$ uvt-kvm ssh testuvt --insecure

You can get root access on the VM with sudo -i.

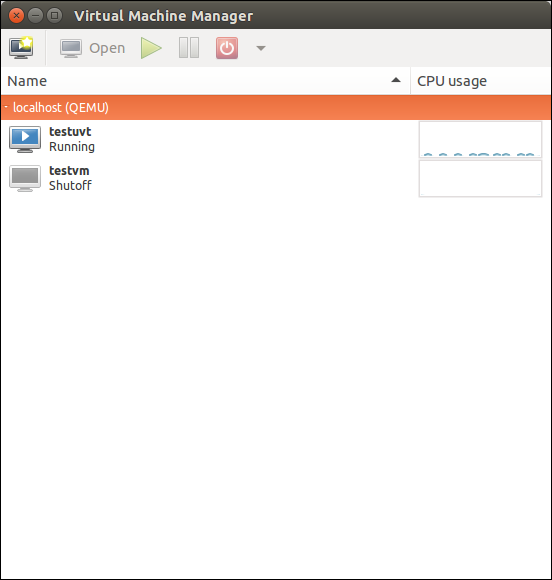

Managing libvirt using the graphical Virtual Machine Manager

Virtual Machine Manager is a front-end to libvirt. It helps system administrators manage their VMs through a convenient graphical interface.

- Installing Virtual Machine Manager:

$ sudo apt-get -y install virt-manager - Running Virtual Machine Manager:

-

You can find it in the application menu or run

virt-managerfrom the command line.

As you can see the two VMs we created earlier, are already there.

-

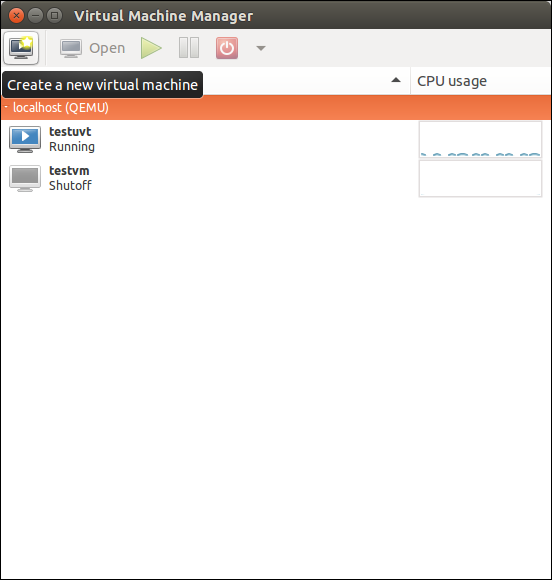

Creating a new machine.

- Press the Create a new machine icon:


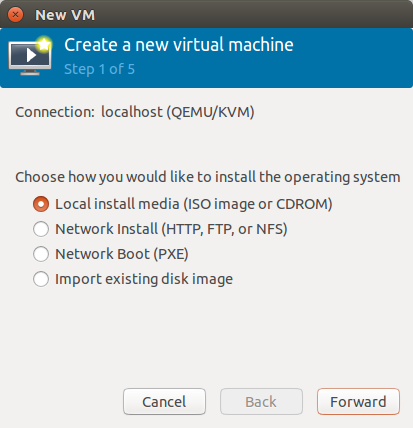

- New VM options.

- Select one of the offered installation options to continue.

Each option involves different steps, so you may need to read the documentation for all the details. The first option is the most straightforward.

Managing your VMs with virsh

- Listing machines.

- List only running machines:

$ virsh list
 Id    Name                           State
----------------------------------------------------
 5     testuvt                        running

- List all machines:

$ virsh list --all
 Id    Name                           State
----------------------------------------------------
 5     testuvt                        running
 -     testvm                         shut off

- Starting machines:

$ virsh start testvm
Domain testvm started

- Shutting down machines:

$ virsh shutdown testvm
Domain testvm is being shutdown

- Restarting machines:

$ virsh reboot testuvt
Domain testuvt is being rebooted

- Setting machines to autostart:

- Enable autostart:

$ virsh autostart testuvt
Domain testuvt marked as autostarted

- Disable autostart:

$ virsh autostart --disable testuvt
Domain testuvt unmarked as autostarted
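The per-VM commands above combine naturally with virsh list --name, which prints only the names of running domains, one per line. As a sketch (shutdown_all, shutdown-all.sh and the --yes guard are hypothetical), this gracefully shuts down every running VM, for example before host maintenance:

```shell
#!/bin/sh
# Sketch: gracefully shut down every running libvirt domain.
# Usage: sh shutdown-all.sh --yes

shutdown_all() {
    # "virsh list --name" prints running domains one per line,
    # followed by an empty line that we skip.
    virsh list --name | while read -r dom; do
        [ -n "$dom" ] || continue
        virsh shutdown "$dom"
    done
}

if [ "$1" = --yes ] && command -v virsh >/dev/null 2>&1; then
    shutdown_all
fi
```

virsh shutdown only asks each guest OS to power off, so the VMs may take a while to actually stop.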


- Other useful virsh commands:

- console: get console access to a VM.

- destroy: forcefully power off a machine, like pulling the plug (use undefine to delete its definition).

- dominfo: get the machine details.

- migrate: migrate a machine to another libvirt host.

- save: save the machine state to a file and stop it (virsh restore resumes it).

- snapshot-create: create a snapshot of the machine.
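The snapshot commands are worth a short example. A common pattern is to take a named snapshot before a risky change, such as a distribution upgrade, and revert to it if something breaks. The sketch below uses virsh snapshot-create-as, snapshot-list and snapshot-revert; the helper names, snap.sh and the snapshot name pre-upgrade are hypothetical, and it assumes the VM's disk is in qcow2 format, since raw images do not support internal snapshots.

```shell
#!/bin/sh
# Sketch: snapshot a VM before a risky change and roll back if needed.
# Assumes a qcow2 disk (internal snapshots do not work on raw images).
# Usage: sh snap.sh take testuvt pre-upgrade
#        sh snap.sh revert testuvt pre-upgrade

take_snapshot() {
    dom=$1
    snap=$2
    virsh snapshot-create-as "$dom" "$snap" || return 1
    virsh snapshot-list "$dom"    # show the snapshots now available
}

revert_snapshot() {
    virsh snapshot-revert "$1" "$2"
}

if command -v virsh >/dev/null 2>&1 && [ $# -eq 3 ]; then
    case "$1" in
        take) take_snapshot "$2" "$3" ;;
        revert) revert_snapshot "$2" "$3" ;;
        *) echo "usage: $0 take|revert DOMAIN SNAPSHOT" >&2 ;;
    esac
fi
```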

To see all the supported commands, run virsh --help.

Learning to use libvirt is of great value to a Linux sysadmin, because the same commands apply to KVM, XEN, VirtualBox and even container systems like OpenVZ and LXC.

References

- [1] http://www.phoronix.com/scan.php?page=article&item=ubuntu_1404_kvmbox&num=5

- [2] https://help.ubuntu.com/14.04/serverguide/virtualization.html

- [3] https://help.ubuntu.com/community/KVM